Overview

The AI industry is evolving rapidly, with large language models (LLMs) like GPT-4, Claude, Gemini, and DeepSeek-R1 pushing the boundaries of natural language understanding, reasoning, and automation. Open-weight models (Llama 3, Mistral, DeepSeek, Gemma) increasingly rival proprietary ones, enabling businesses and developers to use AI without vendor lock-in.

Why Local Deployment?

- Privacy & Security: Keep sensitive data on-premises, avoiding third-party cloud risks.

- Cost Efficiency: Reduce API costs for high-volume usage.

- Customization: Fine-tune models for domain-specific tasks.

- Offline Access: Ensure AI availability without internet dependency.

- Full Control: Avoid rate limits and policy changes from cloud providers.

With tools like Ollama, running LLMs locally has become straightforward, making self-hosted AI a viable alternative to cloud-based solutions.

Prerequisites

Before we begin, make sure you have:

- A Linux server (a GPU is recommended but not required)

- Docker installed

- At least 8GB RAM (higher recommended)

- Sufficient storage space (the model is several GB in size)
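The checklist above can be turned into a quick preflight script. This is a minimal sketch for Linux, not an official tool: the function names (`kb_to_gib`, `have`, `preflight`) are made up for illustration, and `nvidia-smi` is only a meaningful check if you expect an NVIDIA GPU.

```shell
#!/bin/sh
# Preflight sketch for the prerequisites above (Linux only; names are illustrative).

# Convert a kB figure, as reported in /proc/meminfo, to whole GiB (rounded down).
kb_to_gib() {
    echo $(( $1 / 1024 / 1024 ))
}

# Succeed if the given command is available on PATH.
have() {
    command -v "$1" >/dev/null 2>&1
}

# Print a short report; the optional argument is the filesystem path to check for free space.
preflight() {
    if have docker; then echo "docker: found"; else echo "docker: missing"; fi
    # Assumption: an NVIDIA GPU. Without nvidia-smi, expect CPU-only inference.
    if have nvidia-smi; then echo "gpu: nvidia-smi found"; else echo "gpu: nvidia-smi not found"; fi
    mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    echo "ram: $(kb_to_gib "$mem_kb") GiB total"
    # -P forces one line per filesystem so the awk column numbers are stable.
    df -Ph "${1:-/}" | awk 'NR==2 {print "disk: " $4 " free on " $6}'
}
```

Call `preflight` after sourcing the file. Because the RAM figure is rounded down, a machine sold as 8 GB will usually report 7 GiB here.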

Many models are available, but this tutorial uses DeepSeek-R1. You can explore other models on Ollama’s official site. To deploy the DeepSeek-R1 model on your local server using Ollama, follow these steps:

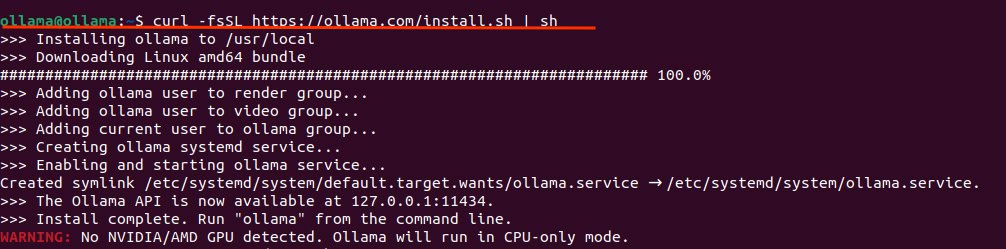

Install Ollama

Ollama is a framework for running large language models (LLMs) locally. It is cross-platform (macOS, Windows, Linux), supports pre-packaged models, and is simple to install.

Run the following command in your terminal to install Ollama:

curl -fsSL https://ollama.com/install.sh | sh

Start the Ollama Service

sudo systemctl start ollama

Verify Installation

Run the following command in your terminal to verify the installation:

ollama --version
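The version check only confirms that the binary is installed. To confirm that the background service is also answering requests, you can query its HTTP API. The sketch below assumes the default address `http://localhost:11434`; the function name `ollama_api_up` and the `OLLAMA_URL` variable are illustrative.

```shell
# Assumed default address; set OLLAMA_URL if your server listens elsewhere.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Succeeds only if the API responds; on success it prints the version as JSON.
ollama_api_up() {
    curl -fsS --max-time 5 "${1:-$OLLAMA_URL}/api/version"
}
```

For example, `ollama_api_up && echo "Ollama API is reachable"`. If the call fails, check the service with `sudo systemctl status ollama`.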

Use Ollama to pull the DeepSeek-R1 Model

Ollama can run a variety of open-source LLMs. Now that Ollama is installed, download the DeepSeek-R1 model. You can pull the model of your choice (deepseek-r1, deepseek-r1:7b, deepseek-v3, etc.) using the Ollama CLI:

ollama pull deepseek-r1

Verify Downloaded Model

Run the following command to confirm that the DeepSeek model was downloaded successfully:

ollama list

The output lists every downloaded model:

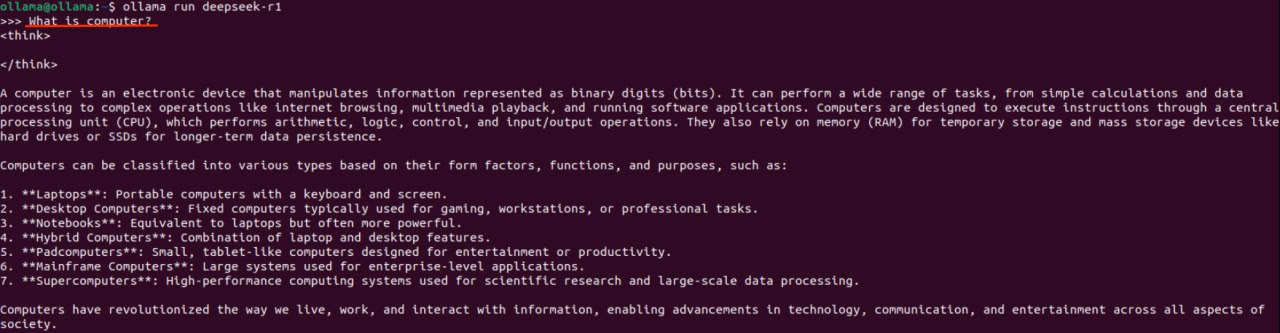

Run DeepSeek-R1 Locally Using CLI

Now that the model is downloaded, you can run it locally with Ollama using the following command:

ollama run deepseek-r1

Once started, the model will run locally, and you can interact with it via the command line.
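Besides the interactive prompt, the same model can be queried over Ollama's HTTP API, which is convenient for scripts. The following is a rough sketch, assuming the default address `http://localhost:11434` and the `/api/generate` endpoint with streaming turned off. The helper names `generate_body` and `ask` are hypothetical, and the prompt is inserted without JSON escaping, so it must not contain double quotes or backslashes.

```shell
# Build the JSON request body: $1 = model name, $2 = prompt.
# No escaping is done, so keep quotes and backslashes out of the prompt.
generate_body() {
    printf '{"model":"%s","prompt":"%s","stream":false}' "$1" "$2"
}

# Send a prompt ($1) to a model ($2, defaulting to deepseek-r1) and print the raw JSON reply.
ask() {
    generate_body "${2:-deepseek-r1}" "$1" |
        curl -fsS "${OLLAMA_URL:-http://localhost:11434}/api/generate" \
            -H 'Content-Type: application/json' -d @-
}
```

For example, `ask "Why is the sky blue?"` prints a JSON object whose `response` field holds the answer. If `jq` is installed, `ask "Why is the sky blue?" | jq -r .response` extracts just the text.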

Model’s Response

When you give the model a prompt, you can watch it reason its way to an answer.

This reasoning shows how the model decides what to respond with, and it is displayed between the <think> tags.

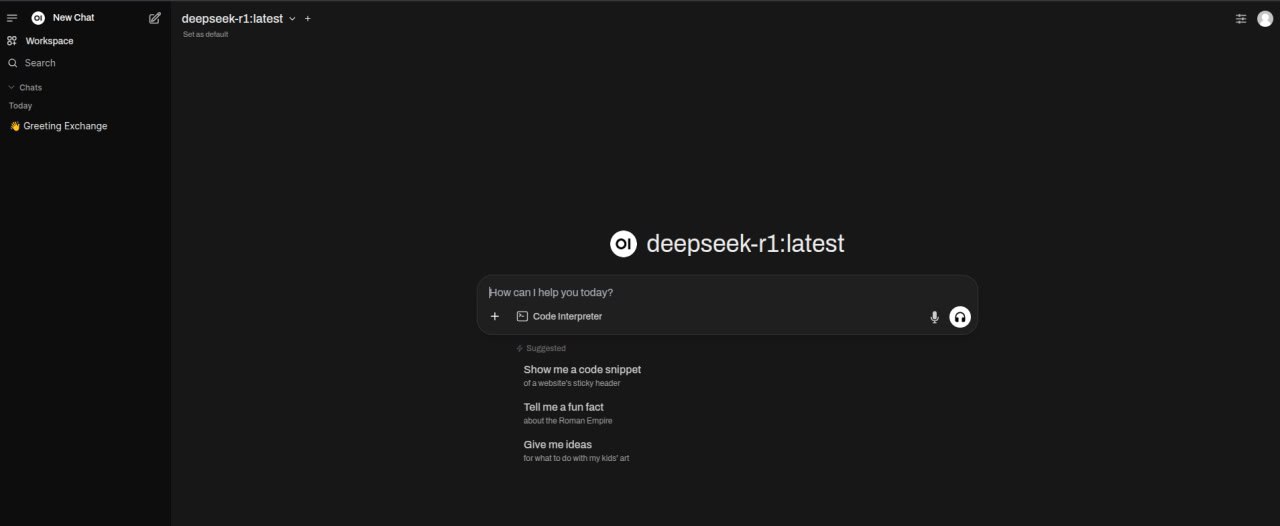
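If you only want the final answer in a script, the reasoning can be filtered out. This is a small sketch that assumes your Ollama version prints the reasoning inline, with the opening and closing `<think>` tags each on their own line. The function name `strip_think` is illustrative.

```shell
# Delete every line from the one containing <think> through the one containing </think>.
# Assumption: the tags sit on their own lines; text sharing a line with a tag is dropped too.
strip_think() {
    sed '/<think>/,/<\/think>/d'
}
```

For example: `ollama run deepseek-r1 "Why is the sky blue?" | strip_think`.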

Set Up the WebUI

For an improved experience, you can use a web-based interface in place of the CLI. Open WebUI is deployed as a Docker container, so Docker must be installed on your server.

This enables a simple, browser-based user interface for managing and querying your models.

First, confirm that Docker is installed on your system by running:

docker --version

Deploy the Open WebUI Using Docker

For more effective model interaction, we’ll deploy Open WebUI (previously Ollama Web UI).

The command below maps container port 8080 to host port 3000 so the UI is reachable from your browser. The --add-host flag lets the container reach the Ollama server running on the host, and the named volume keeps the WebUI data across restarts.

Run the following Docker command:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

The WebUI is now deployed and can be accessed at http://<server-ip>:3000
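The container can take a little while to become ready on first start. A simple polling loop can tell you when the page is actually being served. This is a sketch only: `wait_for_http` is a hypothetical helper, and the retry count and delay are arbitrary defaults.

```shell
# Poll a URL until it answers successfully.
# $1 = URL, $2 = number of attempts (default 30), $3 = seconds between attempts (default 2).
wait_for_http() {
    url=$1
    tries=${2:-30}
    delay=${3:-2}
    i=0
    while [ "$i" -lt "$tries" ]; do
        if curl -fsS -o /dev/null --max-time 5 "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}
```

For example, `wait_for_http http://localhost:3000 && echo "Open WebUI is up"`. If it never comes up, `docker logs open-webui` shows the container's startup output.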

Configure Nginx for Ollama API and WebUI [Optional]

Nginx can also be set up as a reverse proxy to expose Open WebUI and Ollama’s API through a single domain. This way, external users can reach the local AI model through a stable, clean web interface without you having to expose internal ports. The configuration below listens on plain HTTP (port 80); to serve it over HTTPS, add a TLS certificate and a matching listen 443 ssl server block.

    server {
        listen 80;
        server_name your-domain.com;

        location / {
            proxy_pass http://localhost:3000;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
        }

        location /api {
            proxy_pass http://localhost:11434;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
        }
    }
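After saving the configuration, validate and reload Nginx with `sudo nginx -t && sudo systemctl reload nginx`, then check both routes through the proxy. The sketch below assumes the two locations shown above; `check_proxy` is a hypothetical helper, and `/api/tags` is Ollama's endpoint for listing local models.

```shell
# Probe the web UI and the Ollama API through the reverse proxy.
# $1 = base URL without a trailing slash, e.g. http://your-domain.com
check_proxy() {
    base=$1
    if curl -fsS -o /dev/null --max-time 5 "$base/"; then
        echo "web UI: ok"
    else
        echo "web UI: unreachable"
    fi
    if curl -fsS -o /dev/null --max-time 5 "$base/api/tags"; then
        echo "Ollama API: ok"
    else
        echo "Ollama API: unreachable"
    fi
}
```

For example: `check_proxy http://your-domain.com`.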

Conclusion

You now have a fully functional DeepSeek-R1 model running locally on your server with a web interface! You can interact with it through:

- The command line: ollama run deepseek-r1

- The API endpoint: http://your-domain.com/api

- The web UI: http://your-domain.com