Vanilla RAG (Retrieval-Augmented Generation) is an architecture that combines two processes, retrieval and generation, in a single pipeline to improve the quality of generated text. The system first retrieves relevant pieces of information from an external knowledge base, which can include documents, articles, or other text sources. It then passes that information to a generative language model, such as GPT or Llama, which writes a response based on it. The defining feature of Vanilla RAG is its simplicity: it adds no further optimizations or modifications, which makes it the baseline version of the retrieval-augmented generation architecture. Because the response is grounded in external knowledge rather than only in what the model learned during training, this method is especially useful for answering questions or generating content that requires up-to-date or specialized information.

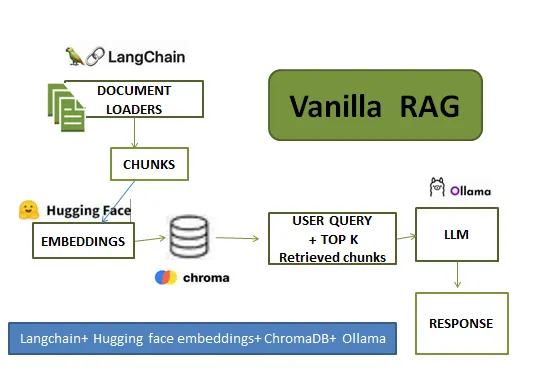

How it works:

Vanilla RAG operates in several steps. First, the system loads the documents from a knowledge base and divides them into smaller, manageable chunks to ensure efficient processing. These chunks are then converted into numerical representations, called embeddings, using a text embedding model. The embeddings are stored in a vector database, which serves as a searchable index of the processed text. When a query is made, the system embeds the query with the same model and retrieves the chunks whose embeddings are most similar to it. Finally, a language model uses the retrieved chunks as context to generate a coherent and contextually appropriate response.
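The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the `embed` function is a toy word-count stand-in for a real text embedding model, `VectorStore` is a hypothetical in-memory class standing in for a vector database, and the final prompt is printed instead of being sent to a language model.

```python
import math
import re
from collections import Counter

def chunk_text(text, max_words=40):
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text):
    """Toy embedding (assumption): lowercase word counts instead of a real model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class VectorStore:
    """Hypothetical in-memory stand-in for a vector database."""
    def __init__(self):
        self.items = []  # list of (embedding, chunk) pairs

    def add(self, chunk):
        self.items.append((embed(chunk), chunk))

    def search(self, query, k=2):
        q = embed(query)  # the query is embedded the same way as the chunks
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]

def build_prompt(query, chunks):
    """Place the retrieved chunks in front of the question as context."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"

documents = [
    "Sunflowers are tall annual plants with large yellow flower heads.",
    "Sunflower pollination is carried out mainly by bees that visit the florets.",
    "Roses are woody perennials that are often grown for their fragrance.",
]

# Load, chunk, embed, and store
store = VectorStore()
for doc in documents:
    for chunk in chunk_text(doc):
        store.add(chunk)

# Retrieve, then build the prompt a language model would receive
query = "How does sunflower pollination work?"
top_chunks = store.search(query, k=2)
prompt = build_prompt(query, top_chunks)
print(prompt)
```

In a real system the word-count vectors would be replaced by dense embeddings from a trained model, and `prompt` would be passed to the language model to produce the final answer.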

Focus: Text-based documents. Vanilla RAG is limited to handling only text-based data, making it unsuitable for scenarios that require multimodal information like images, tables, or audio.

Scenario:

Suppose a botanist uses a Vanilla RAG system and asks: “Tell me about the sunflower and its pollination.”

The system would only retrieve textual information about sunflowers from its knowledge base, such as a written description of their appearance, habitat, and pollination process. It would completely miss the opportunity to provide visual identification (images of sunflowers), detailed bloom data (tables), or audio clips of bees interacting with the flower.

As a result, the response would be incomplete and less informative, lacking the multidimensional insights that could have been gained through images, tables, or audio. This limitation makes Vanilla RAG unsuitable for tasks requiring rich, multimodal understanding.

Multimodal RAG:

Multimodal RAG (Retrieval-Augmented Generation) extends the basic RAG architecture by incorporating multiple types of data or modalities, such as text, images, or even audio, into the retrieval and generation process. In this approach, the model retrieves not only relevant text but also other forms of information that could be useful for generating a more accurate or contextually appropriate response. For example, it might retrieve an image alongside text to help answer a question that requires visual context. The generative model then synthesizes both the retrieved textual and non-textual information to generate a comprehensive and multimodal response. This makes Multimodal RAG capable of handling more complex tasks that require understanding and integrating different forms of data.

How it works:

Multimodal RAG integrates both text and image data to generate comprehensive responses. The knowledge base combines textual documents and images, enabling diverse retrieval options. Text is converted into embeddings using a text embedding model, while images are converted into embeddings using an image embedding model, such as CLIP. These embeddings are stored in a unified vector database, which holds both text and image data. When a query is made, the system retrieves the most relevant text chunks and images from this database. Finally, a prompt template guides a multimodal large language model (LLM), one that can understand and process both text and images, to generate a coherent and contextually rich response by synthesizing the retrieved data.
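As a rough sketch of the unified index described above, the Python below stores text and image records side by side and retrieves across both. Everything here is illustrative: `Record`, `embed_image`, the file paths, and the tag strings are hypothetical, and the toy word-count embedding only imitates what a model such as CLIP does when it maps images and text into one shared vector space.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass

@dataclass
class Record:
    modality: str       # "text" or "image"
    content: str        # the text chunk, or a path to the image file
    embedding: Counter  # vector used for similarity search

def toy_embed(description):
    """Toy shared-space embedding (assumption): lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", description.lower()))

def embed_text(chunk):
    return toy_embed(chunk)

def embed_image(path, tags):
    # Stand-in for an image encoder: a real one would read the pixels at
    # `path`. Here the hypothetical `tags` string plays that role.
    return toy_embed(tags)

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Unified index: text and image records live in the same list
texts = [
    "Sunflower pollination is carried out mainly by bees.",
    "Roses are often grown for their fragrance.",
]
images = [
    ("images/sunflower.png", "yellow sunflower bloom with a bee"),
    ("images/rose.png", "red rose petals"),
]
index = [Record("text", t, embed_text(t)) for t in texts]
index += [Record("image", path, embed_image(path, tags)) for path, tags in images]

def retrieve(query, k=2):
    """Return the k records most similar to the query, regardless of modality."""
    q = toy_embed(query)
    return sorted(index, key=lambda r: cosine(q, r.embedding), reverse=True)[:k]

def build_prompt(query, records):
    """Prompt template listing each retrieved item with its modality."""
    lines = "\n".join(f"[{r.modality}] {r.content}" for r in records)
    return f"Use the retrieved items to answer.\n\nRetrieved:\n{lines}\n\nQuestion: {query}"

hits = retrieve("sunflower pollination by bees", k=2)
print(build_prompt("Tell me about the sunflower and its pollination.", hits))
```

With a real multimodal LLM, the retrieved images would be attached as image inputs rather than listed as file paths in the prompt text.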

Focus: Multiple types of data (text + images + tables + audio). Multimodal RAG can retrieve and combine these diverse data types, making it ideal for scenarios requiring rich, multidimensional insights.

Scenario:

Imagine the botanist asks the Multimodal RAG system, “Tell me about the sunflower and its pollination.”

The system retrieves a textual description of the sunflower’s characteristics, a high-quality image for visual identification, a table showing bloom times and preferred habitats, and an audio clip of bees pollinating the flower. It then synthesizes this information into a comprehensive response that combines textual knowledge, visual context, structured data, and auditory insights.

This ability to integrate and present multiple data types ensures that the botanist gets a rich and holistic understanding of the sunflower and its pollination, making Multimodal RAG a powerful tool for complex, real-world scenarios.

Conclusion

- Vanilla RAG: Best for generating responses based on external text information, such as customer support or content generation. It excels in tasks where pulling relevant text from a database improves response quality.
- Multimodal RAG: Ideal for handling complex, specialized, or multimodal data (e.g., images, audio). It is better suited for real-world scenarios requiring the integration of multiple data types.

By choosing the appropriate RAG model based on the data types and task complexity, you can enhance the quality and relevance of generated responses.